Posts tagged security

Security Through Obscurity

This was originally written for Dave Farber’s IP list.

I take some of the blame for helping to spread “no security through obscurity,” first with some talks on COPS (developed with Dan Farmer) in 1990, and then in the first edition of Practical Unix Security (with Simson Garfinkel) in 1991. None of us originated the term, but I know we helped popularize it with those items.

The origin of the phrase is arguably from one of Kerckhoffs’s principles for strong cryptography: that there should be no need for the cryptographic algorithm to be secret, and it can be safely disclosed to your enemy. The point there is that the strength of a cryptographic mechanism that depends on the secrecy of the algorithm is poor; to use Schneier’s term, it is brittle: Once the algorithm is discovered, there is no (or minimal) protection left, and once broken it cannot be repaired. Worse, if an attacker manages to discover the algorithm without disclosing that discovery then she can exploit it over time before it can be fixed.

The mapping to OS vulnerabilities is somewhat analogous: if your security depends only (or primarily) on keeping a vulnerability secret, then that security is brittle—once the vulnerability is disclosed, the system becomes more vulnerable. And, analogously, if an attacker knows the vulnerability and hides that discovery, he can exploit it when desired.

However, the usual intent behind the current use of the phrase “security through obscurity” is not correct. One goal of securing a system is to increase the work factor for the opponent, with a secondary goal of increasing the likelihood of detecting when an attack is undertaken. By that definition, obscurity and secrecy do provide some security because they increase the work factor an opponent must expend to successfully attack your system. The obscurity may also help expose an attacker because it will require some probing to penetrate the obscurity, thus allowing some instrumentation and advanced warning.

In point of fact, most of our current systems have “security through obscurity” and it works! Every potential vulnerability in the codebase that has yet to be discovered by (or revealed to) someone who might exploit it is not yet a realized vulnerability. Thus, our security (protection, actually) is better because of that “obscurity”! In many (most?) cases, there is little or no danger to the general public until some yahoo publishes the vulnerability and an exploit far and wide.

Passwords are a form of secret (obscurity) that provide protection. Classifying or obfuscating a codebase can increase the work factor for an attacker, thus providing additional security. This is commonly used in military systems and commercial trade secrets, whereby details are kept hidden to limit access and increase the work factor for an attacker.
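To make "work factor" concrete, here is a minimal sketch of the arithmetic for a password guessed by brute force. The function names and the guessing rate are assumptions chosen for illustration, not measurements of any real attacker.

```python
def expected_guesses(alphabet_size, length):
    """Average number of brute-force guesses: half of the possible passwords."""
    return alphabet_size ** length // 2


def years_to_search(alphabet_size, length, guesses_per_second):
    """Rough time to reach the average case at an assumed guessing rate."""
    seconds = expected_guesses(alphabet_size, length) / guesses_per_second
    return seconds / (365 * 24 * 3600)


if __name__ == "__main__":
    # Hypothetical rate of one million guesses per second.
    for length in (6, 8, 10):
        print(length, expected_guesses(26, length), years_to_search(26, length, 1_000_000))
```

Each extra character multiplies the attacker's work by the alphabet size, which is the sense in which a secret "increases the work factor" without making an attack impossible.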

The problem occurs when a flaw is discovered and the owners/operators attempt to maintain (indefinitely) the sanctity of the system by stopping disclosure of the flaw. That is not generally going to work for long, especially in the face of determined foes. The owners/operators should realize that there is no (indefinite) security in keeping the flaw secret.

The solution is to design the system from the start so it is highly robust, with multiple levels of protection. That way, a discovered flaw can be tolerated even if it is disclosed, until it is fixed or otherwise protected. Few consumer systems are built this way.

Bottom line: “security through obscurity” actually works in many cases and is not, in itself, a bad thing. Security for the population at large is often damaged by the people who claim to be defending the systems by publishing the flaws and exploits trying to force fixes. But vendors and operators (and lawyers) should not depend on secrecy as primary protection.

Complexity, virtualization, security, and an old approach

[tags]complexity,security,virtualization,microkernels[/tags]

One of the key properties that works against strong security is complexity. Complexity poses problems in a number of ways. The more complexity in an operating system, for instance, the more difficult it is for those writing and maintaining it to understand how it will behave under extreme circumstances. Complexity makes it difficult to understand what is needed, and thus to write fault-free code. Complex systems are more difficult to test and prove properties about. Complex systems are more difficult to properly patch when faults are found, usually because of the difficulty in ensuring that there are no side-effects. Complex systems can have backdoors and trojan code implanted that is more difficult to find because of complexity. Complex operations tend to have more failure modes. Complex operations may also have longer windows where race conditions can be exploited. Complex code also tends to be bigger than simple code, and that means more opportunity for accidents, omissions and manifestation of code errors.

Put simply, complexity creates problems.

Saltzer and Schroeder identified it in their 1975 paper in Proceedings of the IEEE, “The Protection of Information in Computer Systems.” They listed “economy of mechanism” first among their design principles for secure systems.

Some of the biggest problems we have now in security (and arguably, computing) are caused by “feature creep” as we continue to expand systems to add new features. Yes, those new features add new capabilities, but often the additions are foisted off on everyone whether they want them or not. Thus, everyone has to suffer the consequences of the next expanded release of Linux, Windows (Vista), Oracle, and so on. Many of the new features are there as legitimate improvements for everyone, but some are of interest to only a minority of users, and others are simply there because the designers thought they might be nifty. And besides, why would someone upgrade unless there were lots of new features?

Of course, this has secondary effects on complexity in addition to the obvious complexity of a system with new features. One example has to do with backwards compatibility. Because customers are unlikely to upgrade to the new, improved product if it means they have to throw out their old applications and data, the software producers need to provide extra code for compatibility with legacy systems. This is often not straightforward, and it adds new complexity.

Another form of complexity has to do with hardware changes. The increase in software complexity has been one motivating factor for hardware designers, and has been for quite some time. Back in the 1960s when systems began to support time sharing, virtual memory became a necessity, and the hardware mechanisms for page and segment tables needed to be designed into systems to maintain reasonable performance. Now we have systems with more and more processes running in the background to support the extra complexity of our systems, so designers are adding extra processing cores and support for process scheduling.

Yet another form of complexity is involved with the user interface. Typical users (and especially support personnel) now have to master many new options and features, and understand all of their interactions. This is increasingly difficult for someone of even above-average ability. It is no wonder that the average home user has myriad problems using their systems!

Of course, the security implications of all this complexity have been obvious for some time. Rather than address the problem head-on by reducing the complexity and changing development methods (e.g., use safer tools and systems, with more formal design), we have recently seen a trend towards virtualization. The idea is that we confine our systems (operating systems, web services, database, etc) in a virtual environment supported by an underlying hypervisor. If the code breaks…or someone breaks it…the virtualization contains the problems. At least, in theory. And now we have vendors providing chipsets with even more complicated instruction sets to support the approach. But this is simply adding yet more complexity. And that can’t be good in the long run. Already attacks have been formulated to take advantage of these added “features.”

We lose many things as we make systems more complex. Besides security and correctness, we also end up paying for resources we don’t use. And we are also paying for power and cooling for chips that are probably more powerful than we really need. If our software systems weren’t doing so much, we wouldn’t need quite so much power “under the hood” in the hardware.

Although one example is hardly proof of this general proposition, consider the results presented in 86 Mac Plus Vs. 07 AMD DualCore. A 21-year old system beat a current top-of-the-line system on the majority of a set of operations that a typical user might perform during a work session. On your current system, do a “ps” or run the task manager. How many of those processes are really contributing to the tasks you want to carry out? Look at the memory in use—how much of what is in use is really needed for the tasks you want to carry out?
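The “ps” exercise above can be turned into a small tally. This is a minimal sketch for Unix-like systems: `summarize_ps` and `snapshot` are names made up for this example, and it assumes `ps` reports resident memory (`rss`) in kilobyte units, which is typical but worth checking on your platform.

```python
import subprocess


def summarize_ps(ps_output):
    """Parse lines of '<rss> <command>' into (process_count, total_rss, five_largest)."""
    procs = []
    for line in ps_output.splitlines():
        parts = line.split(None, 1)
        if len(parts) != 2 or not parts[0].isdigit():
            continue  # skip blank or malformed lines
        procs.append((int(parts[0]), parts[1].strip()))
    procs.sort(reverse=True)  # largest resident memory first
    return len(procs), sum(rss for rss, _ in procs), procs[:5]


def snapshot():
    """Run ps for all processes; 'rss=' and 'comm=' suppress the column headers."""
    result = subprocess.run(
        ["ps", "-A", "-o", "rss=", "-o", "comm="],
        capture_output=True, text=True, check=True,
    )
    return summarize_ps(result.stdout)
```

Calling `snapshot()` gives the number of processes, their combined resident memory, and the five largest, which is a starting point for asking how many of them serve the task at hand.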

Perhaps I can be accused of being a reactionary (a nice word meaning “old fart”), but I remember running Unix in 32K of memory. I wrote my first full-fledged operating system with processes, a file system, network and communication drivers, all in 40K. I remember the community’s efforts in the 1980s and early 1990s to build microkernels. I remember the concept of RISC having a profound impact on the field as people saw how much faster a chip could be if it didn’t need to support complexity in the instruction set. How did we get from there to here?

Perhaps the time is nearly right to have another revolution of minimalism. We have people developing low-power chips and tiny operating systems for sensor-based applications. Perhaps they can show the rest of us some old ideas made new.

And for security? Well, I’ve been trying for several years to build a system (Poly^2) that minimizes the OS to provide increased security. To date, I haven’t had much luck in getting sufficient funding to really construct a proper prototype; I currently have some funding from NSF to build a minimal version, but the funding won’t allow anything close to a real implementation. What I’m trying to show is too contrary to conventional wisdom. It isn’t of interest to the software or hardware vendors because it is so contrary to their business models, and the idea is so foreign to most of the reviewers at funding agencies, who are used to building ever more complex systems.

Imagine a system with several dozen (or hundred) processor cores. Do we need process scheduling and switching support if we have a core for each active process? Do we need virtual memory support if we have a few gigabytes of memory available per core? Back in the 1960s we couldn’t imagine such a system, and no nation or company could afford to build one. But now that wouldn’t even be particularly expensive compared to many modern systems. How much simpler, faster, and more secure would such a system be? In 5 years we may be able to buy such a system on a single chip—will we be ready to use it, or will we still be chasing 200 million line operating systems down virtual rat holes?

So, I challenge my (few) readers to think about minimalism. If we reduce the complexity of our systems what might we accomplish? What might we achieve if we threw out the current designs and started over from a new beginning and with our current knowledge and capabilities?

[Small typo fixed 6/21—thanks cfr]

Copyright © 2007 by E. H. Spafford

[posted with ecto]

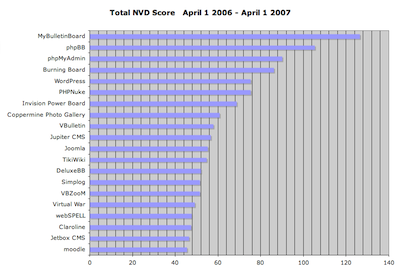

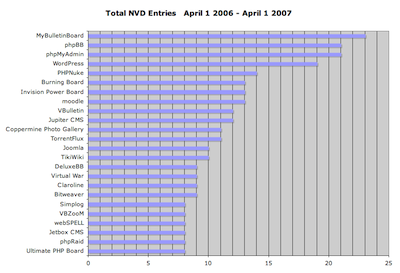

The PHP App Insecurity Top 20

I’ve spent some of my down time in the past couple weeks working with the NIST NVD data to get stats on PHP application vulnerabilities. What follows is a breakdown of the 20 PHP-based applications that had the highest aggregate vulnerability scores (NIST assigns a score from 1-10 for the severity of each entry), and the highest total number of vulnerabilities, over the past 12 months. Of the two, I feel that the aggregate score is a better indicator of security issues.

A few caveats:

- The data here covers the period between April 1 2006 and April 1 2007.

- This obviously only includes reported vulnerabilities. There are surely a lot more applications that are very insecure, but for one reason or another haven’t had as many reports.

- I chose 20 as the cutoff mainly for the sake of making the data a little easier to swallow (and chart nicely). There are about 1,800 distinct apps in the NIST NVD that are (as far as I could determine) PHP-based.

Without further ado, here are the tepid Excel charts:

A couple notes:

- There are 25 entries in the top “20” by vulnerability count, due to matching vulnerability counts.

- I’d never even heard of MyBulletinBoard, the top entry in both lists. It hasn’t had any vulnerabilities in the NVD since September of 2006, which says something about how numerous and severe the entries between April and September 2006 were. This appears to be the same product as “MyBB,” so perhaps the situation has improved, as MyBB only has one NVD entry in the entire period (CVE-2007-0544).

- WordPress has had a bad start to 2007, with numerous vulnerabilities that significantly increased its ranking. March 2007 was particularly bad, with 7 new vulnerabilities reported.

- Bulletin board/forum software is by far the most common type of application in the top 20. A couple forum apps that have very low numbers of vulnerability reports: Vanilla and FUDForum.
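The two rankings discussed above (aggregate severity score and vulnerability count per application, with ties at the cutoff kept) can be sketched as follows. The `(app, score)` record shape and the function names are assumptions for illustration; they are not the NVD's own data format or the method actually used to build the charts.

```python
from collections import defaultdict


def top_with_ties(totals, cutoff):
    """Rank apps by value, descending, keeping every app tied with the cutoff-th one."""
    ranked = sorted(totals.items(), key=lambda kv: (-kv[1], kv[0]))
    if len(ranked) <= cutoff:
        return ranked
    threshold = ranked[cutoff - 1][1]
    return [kv for kv in ranked if kv[1] >= threshold]


def rank_apps(entries, cutoff=20):
    """entries: iterable of (app_name, severity_score) pairs, one per vulnerability."""
    score_sum = defaultdict(float)
    count = defaultdict(int)
    for app, score in entries:
        score_sum[app] += score
        count[app] += 1
    return top_with_ties(score_sum, cutoff), top_with_ties(count, cutoff)
```

Keeping ties is what lets a top “20” by count grow past 20 entries, while summed scores rarely tie exactly.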

I do intend to keep this data up-to-date if people find it interesting, so let me know if you’d like me to do so, or if you’d like to see other types of analysis.

[tags]php, security, application security, vulnerabilities, nist, nvd, statistics[/tags]

What security push?

[tags]Vista, Windows, security,flaws,Microsoft[/tags]

Update: additions made 4/19 and 4/24, at the end.

Back in 2002, Microsoft performed a “security standdown” that Bill Gates publicly stated cost the company over $100 million. That extreme measure was taken because of numerous security flaws popping up in Microsoft products, steadily chipping away at MS’s reputation, customer safety, and internal resources. (I was told by one MS staffer that response to major security flaws often cost close to $1 million each for staff time, product changes, customer response, etc. I don’t know if that is true, but the reality certainly was/is a substantial number.)

Without a doubt, people inside Microsoft took the issue seriously. They put all their personnel through a security course, invested heavily in new testing technologies, and even went so far as to convene an advisory board of outside experts (the TCAAB)—including some who have not always been favorably disposed towards MS security efforts. Security of the Microsoft code base suddenly became a Very Big Deal.

Fast forward 5 years: When Vista was released a few months ago, we saw lots of announcements that it was the most secure version of Windows ever, but that metric was not otherwise qualified; a cynic might comment that such an achievement would not be difficult. The user population has become habituated to the monthly release of security patches for existing products, with the occasional emergency patch. Bundling all the patches together undoubtedly helps reduce the overhead in producing them, but also serves to obscure how many different flaws are contained inside each patch set. The number of flaws may not have decreased all that much from years ago.

Meanwhile, reports from inside MS indicate that there was no comprehensive testing of personnel to see how the security training worked and no follow-on training. The code base for new products has continued to grow, thus opening new possibilities for flaws and misconfiguration. The academic advisory board may still exist, but I can’t find a recent mention of it on the Microsoft web pages, and some of the people I know who were on it (myself included) were dismissed over a year ago. The external research program at MSR that connected with academic institutions doing information security research seems to have largely evaporated—the WWW page for the effort lists John Spencer as contact, and he retired from Microsoft last year. The upcoming Microsoft Research Faculty Summit has 9 research tracks, and none of them are in security.

Microsoft seems to project the attitude that they have solved the security problem.

If that’s so, why are we still seeing significant security flaws appear that affect not only their old software but also their new software written under the new, extra special security regime, such as Vista and Longhorn? The ANI flaw and the recent DNS flaw are both glaring examples of major problems that shouldn’t have been in the current code: the ANI flaw is very similar to a years-old flaw that was already known inside Microsoft, and the DNS flaw is another buffer overflow!! There are even reports that there may be dozens (or hundreds) of patches awaiting distribution for Vista.

Undoubtedly, the $100 million spent back in 2002 was worth something: the code quality has definitely improved. There is greater awareness inside Microsoft about security and privacy issues. I also know for a fact that there are a lot of bright, talented and very motivated people inside Microsoft who care about these issues. But questions remain: did Microsoft get its money’s worth? Did it invest wisely and if so, why are we still seeing so many (and so many silly) security flaws? Why does it seem that security is no longer a priority? What does that portend for Vista, Longhorn, and Office 2007? (And if you read the “standdown” article, one wonders also about Mr. Nash’s posterior.)

I have great respect for many of the things Microsoft has done, and admiration for many of the people who work there. I simply wish they had some upper management who would realize that security (and privacy) are ongoing process needs, not one-time problems to overcome with a “campaign.”

What do you think?

Update 4/19: The TCAAB does still continue to exist, apparently, but with a greater focus on privacy issues than security. I do not know who the current members might be.

Update 4/24: I have heard informally from someone inside Microsoft in response to this post. He pointed out several issues that I think are valid and deserve airing here:

- Security training of personnel is on-going. It still is unclear to me whether they are employing good educational methods, including follow-up testing, to optimize their instruction.

- The TCAAB does indeed continue (and was meeting when I made the original post!). It has undergone some changes since it was announced, but is largely the same as when it was formed. What they are doing, and what effect they are having (if any), is unclear.

- Microsoft’s patch process is much smoother now, and bundled patches are easier to apply than lots of individual ones. (However, there are still a lot of patches for things that shouldn’t be in the code.)

- The loss of outreach to academia by MSR does not imply they aren’t still doing research in security issues.

Many of my questions still remain unanswered, including Mr. Nash’s condition….

Configuration: the forgotten side of security

I was interviewed for an article, Configuration: the forgotten side of security, about proactive security. I am a big believer in proactive security. However, I do not discount the need for reactive security. In the email interview I stated the following:

I define proactive security as a method of protecting information and resources through proper design and implementation to reduce the need for reactive security measures. In contrast, reactive security is a method of remediation and correction used when your proactive security measures fail. The two are interdependent.

I was specifically asked for best practices on setting up UNIX/Linux systems. My response was to provide some generic goals for configuring systems, which surprisingly made it into the article. I avoided listing specific tasks or steps because those change over time and vary based on the systems used. I have written a security configuration guide or two in my time, so I know how quickly they become out of date. Here are the goals again:

The five basic goals of system configuration:

- Build for a specific purpose and only include the bare minimum needed to accomplish the task.

- Protect the availability and integrity of data at rest.

- Protect the confidentiality and integrity of data in motion.

- Disable all unnecessary resources.

- Limit and record access to necessary resources.
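As one small illustration of the first and fourth goals, a build can be checked against an explicit list of what it is supposed to run. This is only a sketch: the service names are hypothetical, and how you collect the list of enabled services depends on the system in question.

```python
def audit_services(enabled, required):
    """Compare what is enabled against what the system's purpose requires.

    Returns (unnecessary, missing): services to disable, and required
    services that are not enabled.
    """
    enabled_set, required_set = set(enabled), set(required)
    unnecessary = sorted(enabled_set - required_set)
    missing = sorted(required_set - enabled_set)
    return unnecessary, missing


if __name__ == "__main__":
    # Hypothetical single-purpose web server.
    enabled = ["sshd", "httpd", "cups", "nfs", "ntpd"]
    required = ["sshd", "httpd", "ntpd", "syslogd"]
    print(audit_services(enabled, required))
```

The point of keeping the required list explicit is that anything not on it is a candidate for removal by default, rather than something that has to be argued out of the build.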

In all, the most exciting aspect is that I was quoted in an article alongside Prof. Saltzer. That’s good company to have.

Web App Security - The New Battlefront

Well, we’re all pretty beat from this year’s Symposium, but things went off pretty well. Along with lots of running around to make sure posters showed up and stuff, I was able to give a presentation called Web Application Security - The New Battlefront. People must like ridiculous titles like that, because turnout was pretty good. Anyway, I covered the current trend away from OS attacks/vandalism and towards application attacks for financial gain, which includes web apps. We went over the major types of attacks, and I introduced a brief summary of what I feel needs to be done in the education, tool development, and app auditing areas to improve the rather poor state of affairs. I’ll expand on these topics more in the future, but you can see my slides and watch the video for now:

Useful Firefox Security Extensions

Mozilla’s Firefox browser claims to provide a safer browsing experience out of the box, but some of the best security features of Firefox are only available as extensions. Here’s a roundup of some of the more useful ones I’ve found.

- Add n’ Edit Cookies This might be more of a web developer tool, but being able to view in detail the cookies that various sites set on your visits can be an eye-opening experience. This extension not only shows you all the details, but lets you modify them too. You’ll be surprised at how many web apps do foolish things like saving your password in the cookie.

- Dr. Web Anti-Virus Link Checker This is an interesting idea—scanning files for viruses before you download them. Basically, this extension adds an option to the link context menu that allows you to pass the link to the Dr. Web AV service. I haven’t rigorously tested this or anything, but it’s an interesting concept that could be part of an effective multilayer personal security model.

- FormFox This extension doesn’t do a whole lot, but what it does is important: it shows a tooltip with the form action URL when you roll over a form submission button. Extending this further to visually differentiate submission buttons that submit to SSL URLs would be really nice (as suggested by Chris Shiflett).

- FlashBlock Flash hasn’t been quite as popular an attack vector as Javascript, but it still potentially could be a threat, and it’s often an annoyance. This extension disables all embedded Flash elements by default (score one for securing things by default), allowing you to click to activate a particular one if you like. It lacks the flexibility I’d like (things like whitelists would be very handy), and doesn’t give you much (any?) info about the Flash element before you run it, but it’s still a handy tool.

- LiveHTTPHeaders & Header Monitor LiveHTTPHeaders is an incredibly useful tool for web developers, displaying all of the header traffic between the client and server. Header Monitor is basically an add-on for LiveHTTPHeaders that displays a chosen header in Firefox’s status bar. They’re not really specifically security tools, but they do offer a lot of info on what’s really going on when you’re browsing, and an educated user is a safer user.

- JavaScript Option This restores some of the granularity Firefox users used to have over what Javascript can and cannot do. I’d like to see this idea taken farther (see below), but it’s handy regardless.

- NoScript This extension is pretty smooth. Of all the addons for Firefox covered here, this is the one to get. NoScript is a powerful JavaScript execution whitelisting tool, allowing full user control over what domains allow scripts to run. Notifications of blocked execution and the allowed domain interface are nearly identical to the built-in Firefox popup blocker, so users should find it comfortable to work with. NoScript can also block Flash, Java, and “other plugins;” forbid bookmarklets; block or allow the “ping” attribute of the anchor tag; and attempt to rewrite links that execute JavaScript to go to their intended destination without triggering the script code. The one thing I’d really like to see from this extension would be more granularity over what the JavaScript engine can access. Now it’s only “on” or “off,” but being able to disable things like cookie access would eliminate a lot of potential security issues while still letting JS power rich web app interfaces. Also read Pascal Meunier’s take on NoScript.

- QuickJava Places handy little buttons in the status bar that let you quickly enable or disable Java or Javascript support. Note that this will not work with the latest stable Firefox (1.5.0.1). Hopefully a new version will be available soon.

- ShowIP This is another tool that isn’t aimed at security per se, but offers a lot of useful information. ShowIP drops the IP address of the current site in your status bar. Clicking on it brings up a menu of lookup options for the IP, like whois and DNS info. You can add additional web lookups if you like, as well as passing the IP to a local program. Handy stuff.

- SpoofStick The idea with this extension is to make it easier to catch spoofing attempts by displaying a very large, brightly colored “You’re on [site name]” in the toolbar. For folks who know what they’re doing this isn’t wildly useful, but it could be just the ticket for less savvy users. It requires a bit too much setup for them, though, and in the end I think this is something the browser itself should be handling.

- Tamper Data Much like LiveHTTPHeaders, Tamper Data is a very useful extension for web devs that lets the user view HTTP headers and POST data passed between the client and server. In addition, Tamper Data makes it easy for the user to alter the data being sent to the server, which is enormously useful for doing security testing against web apps. I also like how the data is presented in TD a bit better than LiveHTTPHeaders: it’s easier to see at a glance all of the traffic and get an overall feel of what’s going on, but you can still drill down and get as much detail as you like.
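The kind of cookie inspection mentioned under Add n’ Edit Cookies can also be roughed out in a script once you have copied a `Set-Cookie` header out of one of the header tools above. This is a minimal sketch using Python’s standard `http.cookies` module; the name-matching heuristic and the checks for the `Secure` and `HttpOnly` flags are assumptions added for this example, not something any of the extensions does.

```python
from http.cookies import SimpleCookie

# Hypothetical heuristic: substrings that hint a credential is stored in the cookie.
SUSPICIOUS_NAMES = ("pass", "pwd", "secret")


def audit_set_cookie(header_value):
    """Return a list of warnings for the value of one Set-Cookie header."""
    cookie = SimpleCookie()
    cookie.load(header_value)
    warnings = []
    for name, morsel in cookie.items():
        if any(s in name.lower() for s in SUSPICIOUS_NAMES):
            warnings.append(f"{name}: name suggests a credential is stored in the cookie")
        if not morsel["secure"]:
            warnings.append(f"{name}: no Secure flag")
        if not morsel["httponly"]:
            warnings.append(f"{name}: no HttpOnly flag")
    return warnings


if __name__ == "__main__":
    for warning in audit_set_cookie("password=hunter2; Path=/"):
        print(warning)
```

A name match is only a hint, of course; a cookie called `data` can hold a password just as easily, which is why looking at the actual values in the browser is still worthwhile.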

Got more Firefox security extensions? Leave a comment and I’ll collect them in an upcoming post.